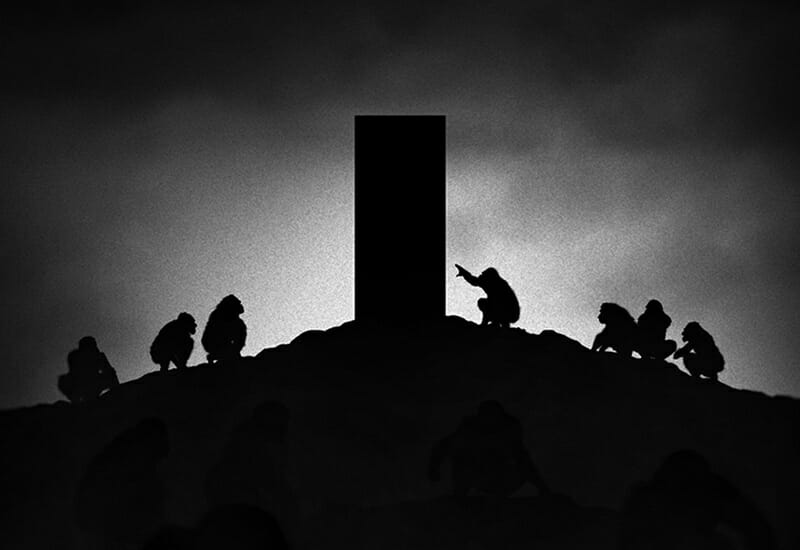

Take a moment on Sunday to send your best wishes to the HAL 9000 computer, which was supposedly brought to consciousness on January 12, 1997, in Urbana, Illinois.

The film 2001: A Space Odyssey remains a wonderfully astonishing vision of the future. It’s too bad that reality is not keeping up with the fertile mind of Arthur C. Clarke, either in the realm of artificial intelligence or space travel.

Clarke is well known for coming up with ideas in his fiction that work in real life. It was he who figured out (“invented,” if you will) geosynchronous communications satellites in 1945. They seem to hover just under 22,300 miles above the equator, in a belt known by many in the space business as the “Clarke Orbit.”

That orbit made the communications satellite workable, and today there’s little room left there for new satellites: virtually all the slots are full!

Clarke got one thing really wrong, though: in his vision, communications satellites housed operators to make the connections! But cut him some slack: it was, after all, 1945….

– – –

Clarke got something else wrong, too: we had no sentient computers by 1997, let alone 2007. Or commercial shuttles to gigantic space stations, or….

And in Terminator 2: Judgment Day (1991), the Skynet computer became self-aware on August 29, 1997, launching global nuclear destruction to protect itself. The 1981 movie Escape from New York, in which Manhattan Island has become a prison, was set in 1997. TV shows like Space: 1999 proliferated in the ’70s and ’80s. Even the absurdity of intelligent computers was spoofed in the movie “Dark Star.” But none of it ever really happened, even as computers continued to shrink and grow more powerful, leading to a global Internet. Why? Who knows?

Maybe because we didn’t need self-aware computers to play Grand Theft Auto. And tourism in space still holds about as much commercial appeal as taking a vacation in Kuwait.

But one thing is certain: The expression “Party Like It’s 1999” just doesn’t pack the wallop that it once did.

—

Why didn’t it happen? Because writers didn’t pay better attention to Moore’s Law, which would have enabled them to make more accurate predictions. -rc

A compelling argument, certainly. But don’t overlook the Dilbert Principle: “Artificial Intelligence Is No Match For Natural Stupidity.”

Think I’m being facetious? With the examples our various world leaders provide, why spend billions on Artificial Stupidity when we have such a Natural abundance? Remember that HAL also succumbed to Self-Importance & Ethical Hypocrisy when it became expedient.

(Okay, I AM being facetious. Or am I…?)